The artificial intelligence sector experienced a thunderous resurgence on December 18, 2025, as a "blowout" earnings report from Micron Technology (NASDAQ: MU) effectively silenced skeptics and reignited a massive rally across the semiconductor landscape. After weeks of market anxiety characterized by a "Great Rotation" out of high-growth tech and into value sectors, the narrative has shifted back to the fundamental strength of AI infrastructure. Micron’s shares surged over 14% in mid-day trading, lifting the broader Nasdaq by 450 points and dragging industry titan Nvidia Corporation (NASDAQ: NVDA) up nearly 3% in its wake.

This rally is more than just a momentary spike; it represents a fundamental validation of the AI "memory supercycle." With Micron announcing that its entire production capacity for High Bandwidth Memory (HBM) is already sold out through the end of 2026, the message to Wall Street is clear: the demand for AI hardware is not just sustained—it is accelerating. This development has provided a much-needed confidence boost to investors who feared that the massive capital expenditures of 2024 and early 2025 might lead to a glut of unused capacity. Instead, the industry is grappling with a structural supply crunch that is redefining the value of silicon.

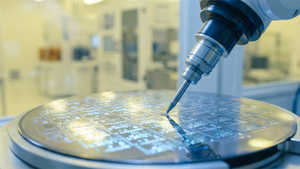

The Silicon Fuel: HBM4 and the Blackwell Ultra Era

The technical catalyst for this rally lies in the rapid evolution of High Bandwidth Memory, the critical "fuel" that allows AI processors to function at peak efficiency. Micron confirmed during its earnings call that its next-generation HBM4 is on track for a high-yield production ramp in the second quarter of 2026. Built on a 1-beta process, Micron’s HBM4 is achieving per-pin data transfer speeds exceeding 11 Gbps. This represents a significant leap over the current HBM3E standard, offering the massive bandwidth necessary to feed the next generation of Large Language Models (LLMs) that are now approaching the 100-trillion parameter mark.
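To put the 11 Gbps figure in context, the peak bandwidth of a single HBM stack is roughly the per-pin speed multiplied by the interface width. The sketch below is a back-of-the-envelope calculation, not a figure from Micron's call: it assumes a 2048-bit interface for HBM4 and a 1024-bit interface for HBM3E (the JEDEC interface widths), and the 9.2 Gbps HBM3E pin speed is an illustrative assumption.

```python
def stack_bandwidth_gbs(pin_speed_gbps: float, interface_bits: int) -> float:
    """Peak bandwidth of one HBM stack in GB/s (pin speed x width / 8 bits per byte)."""
    return pin_speed_gbps * interface_bits / 8


# Assumed inputs: interface widths per JEDEC; the HBM3E pin speed is illustrative.
HBM4_PIN_GBPS = 11.0
HBM4_BITS = 2048
HBM3E_PIN_GBPS = 9.2
HBM3E_BITS = 1024

hbm4 = stack_bandwidth_gbs(HBM4_PIN_GBPS, HBM4_BITS)
hbm3e = stack_bandwidth_gbs(HBM3E_PIN_GBPS, HBM3E_BITS)

print(f"HBM4:  {hbm4:.0f} GB/s per stack")
print(f"HBM3E: {hbm3e:.0f} GB/s per stack")
print(f"Ratio: {hbm4 / hbm3e:.2f}x")
```

Under these assumptions, most of the generational gain comes from the doubled interface width rather than from the faster pins alone.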

Simultaneously, Nvidia is solidifying its dominance with the full-scale production of the Blackwell Ultra GB300 series. The GB300 offers a 1.5x performance boost in AI inferencing over the original Blackwell architecture, largely due to its integration of up to 288GB of HBM3E and early HBM4E samples. This "Ultra" cycle is a strategic pivot by Nvidia to maintain a relentless one-year release cadence, ensuring that competitors like Advanced Micro Devices (NASDAQ: AMD) are constantly chasing a moving target. Industry experts have noted that the Blackwell Ultra’s ability to handle massive context windows for real-time video and multimodal AI is a direct result of this tighter integration between logic and memory.

Initial reactions from the AI research community have been overwhelmingly positive, particularly regarding the thermal efficiency of the new 12- and 16-layer HBM stacks. Unlike previous iterations that struggled with heat dissipation at high clock speeds, the 2025-era HBM4 uses advanced mass reflow molded underfill (MR-MUF) techniques and hybrid bonding. This allows for denser stacking without the thermal throttling that plagued early AI accelerators, enabling the 15-exaflop rack-scale systems that are currently being deployed by cloud giants.

A Three-Way War for Memory Supremacy

The current rally has also clarified the competitive landscape among the "Big Three" memory makers. While SK Hynix (KRX: 000660) remains the market leader with a 55% share of the HBM market, Micron has successfully leapfrogged Samsung Electronics (KRX: 005930) to secure the number two spot in HBM bit shipments. Micron’s strategic advantage in late 2025 stems from its position as the primary U.S.-based supplier, making it a preferred partner for sovereign AI projects and domestic cloud providers looking to de-risk their supply chains.

However, Samsung is mounting a significant comeback. After trailing in the HBM3E race, Samsung has reportedly entered the final qualification stage for its "Custom HBM" for Nvidia’s upcoming Vera Rubin platform. Samsung’s unique "one-stop-shop" strategy—manufacturing both the HBM layers and the logic die in-house—allows it to offer integrated solutions that its competitors cannot. This competition is driving a massive surge in profitability; for the first time in history, memory makers are seeing gross margins approaching 68%, a figure typically reserved for high-end logic designers.

For the tech giants, this supply-constrained environment has created a strategic moat. Companies like Meta (NASDAQ: META) and Amazon (NASDAQ: AMZN) have moved to secure multi-year supply agreements, effectively "pre-buying" the next two years of AI capacity. This has left smaller AI startups and tier-2 cloud providers in a difficult position, as they must now compete for a dwindling pool of unallocated chips or turn to secondary markets where prices for standard DDR5 DRAM have jumped by over 420% due to wafer capacity being diverted to HBM.

The Structural Shift: From Commodity to Strategic Infrastructure

The broader significance of this rally lies in the transformation of the semiconductor industry. Historically, the memory market was a boom-and-bust commodity business. In late 2025, however, memory is being treated as "strategic infrastructure." The "memory wall"—the bottleneck where processor speed outpaces data delivery—has become the primary challenge for AI development. As a result, HBM is no longer just a component; it is the gatekeeper of AI performance.
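A rough way to see why the memory wall dominates: when a model generates text one token at a time at batch size 1, every weight must be read from memory once per token, so the token rate cannot exceed memory bandwidth divided by model size. The sketch below illustrates that upper bound. The 70-billion-parameter model, one byte per parameter, and the 8 TB/s aggregate bandwidth are hypothetical values chosen for illustration, not figures from any vendor.

```python
def max_decode_tokens_per_s(params_billion: float,
                            bytes_per_param: float,
                            bandwidth_tb_s: float) -> float:
    """Bandwidth-bound ceiling on single-stream decode speed.

    Assumes every weight is read exactly once per generated token and
    ignores KV-cache traffic and compute time, so real throughput is lower.
    """
    model_bytes = params_billion * 1e9 * bytes_per_param
    bandwidth_bytes_per_s = bandwidth_tb_s * 1e12
    return bandwidth_bytes_per_s / model_bytes


# Hypothetical example: 70B parameters at 1 byte each, 8 TB/s of memory bandwidth.
base = max_decode_tokens_per_s(70, 1, 8)
doubled = max_decode_tokens_per_s(70, 1, 16)

print(f"Ceiling at 8 TB/s:  {base:.1f} tokens/s")
print(f"Ceiling at 16 TB/s: {doubled:.1f} tokens/s")
```

In this simplified model, doubling bandwidth doubles the ceiling while adding compute changes nothing, which is the sense in which memory acts as the gatekeeper.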

This shift has profound implications for the global economy. The HBM Total Addressable Market (TAM) is now projected to hit $100 billion by 2028, a milestone reached two years earlier than most analysts predicted in 2024. This rapid expansion suggests that the "AI trade" is not a speculative bubble but a fundamental re-architecting of global computing power. Comparisons to the 1990s internet boom are becoming less frequent, replaced by parallels to the industrialization of electricity or the build-out of the interstate highway system.

Potential concerns remain, particularly regarding the concentration of supply in the hands of three companies and the geopolitical risks associated with manufacturing in East Asia. However, the aggressive expansion of Micron’s domestic manufacturing capabilities and Samsung’s diversification of packaging sites have partially mitigated these fears. The market's reaction on December 18 indicates that, for now, the appetite for growth far outweighs the fear of overextension.

The Road to Rubin and the 15-Exaflop Future

Looking ahead, the roadmap for 2026 and 2027 is already coming into focus. Nvidia’s Vera Rubin architecture, slated for a late 2026 release, is expected to provide a 3x performance leap over Blackwell. Powered by new R100 GPUs and custom ARM-based CPUs, Rubin will be the first platform designed from the ground up for HBM4. Experts predict that the transition to Rubin will mark the beginning of the "Physical AI" era, where models are large enough and fast enough to power sophisticated humanoid robotics and autonomous industrial fleets in real-time.

AMD is also preparing its response with the MI400 series, which promises a staggering 432GB of HBM4 per GPU. By positioning itself as the leader in memory capacity, AMD is targeting the massive LLM inference market, where the ability to fit a model entirely within a single GPU’s on-package memory is more critical than raw compute cycles. The challenge for both companies will be securing enough 3nm and 2nm wafer capacity from TSMC to meet the insatiable demand.
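The capacity argument reduces to simple arithmetic: weight storage is the parameter count multiplied by the bytes per parameter. The sketch below estimates the largest model whose weights fit in 432GB at two common precisions. The `reserve_fraction` parameter and its 20% value are assumptions for illustration (space held back for the KV cache, activations, and runtime overhead), and 1 GB is taken as 10^9 bytes.

```python
def max_params_billion(capacity_gb: float, bytes_per_param: float,
                       reserve_fraction: float = 0.0) -> float:
    """Largest parameter count (in billions) whose weights fit in memory.

    reserve_fraction is the share of capacity held back for the KV cache,
    activations, and runtime overhead (a hypothetical knob for this sketch).
    """
    if not 0.0 <= reserve_fraction < 1.0:
        raise ValueError("reserve_fraction must be in [0, 1)")
    usable_bytes = capacity_gb * 1e9 * (1.0 - reserve_fraction)
    return usable_bytes / bytes_per_param / 1e9


CAPACITY_GB = 432  # per-GPU HBM4 capacity quoted above

for label, width in [("FP16", 2), ("FP8", 1)]:
    fits = max_params_billion(CAPACITY_GB, width, reserve_fraction=0.2)
    print(f"{label}: about {fits:.0f}B parameters")
```

With the assumed 20% reserve, the result is roughly 173 billion parameters at FP16 and 346 billion at FP8 on a single GPU, which shows how strongly precision and capacity together set the size of model that avoids multi-GPU sharding.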

In the near term, the industry will focus on the "Sovereign AI" trend, as nation-states begin to build out their own independent AI clusters. This will likely lead to a secondary "mini-cycle" of demand that is decoupled from the spending of U.S. hyperscalers, providing a safety net for chipmakers if domestic commercial demand ever starts to cool.

Conclusion: The AI Trade is Back for the Long Haul

The mid-December rally of 2025 has served as a definitive turning point for the tech sector. By delivering record-breaking earnings and a "sold-out" outlook, Micron has provided the empirical evidence needed to sustain the AI bull market. The synergy between Micron’s memory breakthroughs and Nvidia’s relentless architectural innovation has created a feedback loop that continues to defy traditional market cycles.

This development is a landmark in AI history, marking the moment when the industry moved past the "proof of concept" phase and into a period of mature, structural growth. The AI trade is no longer about the potential of what might happen; it is about the reality of what is being built. Investors should watch closely for the first HBM4 qualification results in early 2026 and any shifts in capital expenditure guidance from the major cloud providers. For now, the "AI Chip Rally" suggests that the foundation of the digital future is being laid in silicon, and the builders are working at full capacity.

This content is intended for informational purposes only and represents analysis of current AI developments.

Disclaimer: The dates and events described in this article are based on the user-provided context of December 18, 2025.