As the calendar turns to late January 2026, the artificial intelligence industry is witnessing a tectonic shift in its hardware foundation. Samsung Electronics Co., Ltd. (KRX: 005930) and SK Hynix Inc. (KRX: 000660) have officially signaled the start of the HBM4 mass production phase, a move that promises to shatter the "memory wall" that has long constrained the scaling of massive large language models. This transition marks the most significant architectural overhaul in high-bandwidth memory history, moving from the incremental improvements of HBM3E to a radically more powerful and efficient 2048-bit interface.

The immediate significance of this milestone cannot be overstated. With the HBM market forecast to grow by a staggering 58% to reach $54.6 billion in 2026, the arrival of HBM4 is the oxygen for a new generation of AI accelerators. Samsung has secured a major strategic victory by clearing final qualification with both NVIDIA Corporation (NASDAQ: NVDA) and Advanced Micro Devices, Inc. (NASDAQ: AMD), ensuring that the upcoming "Rubin" and "Instinct MI400" series will have the necessary memory bandwidth to fuel the next leap in generative AI capabilities.

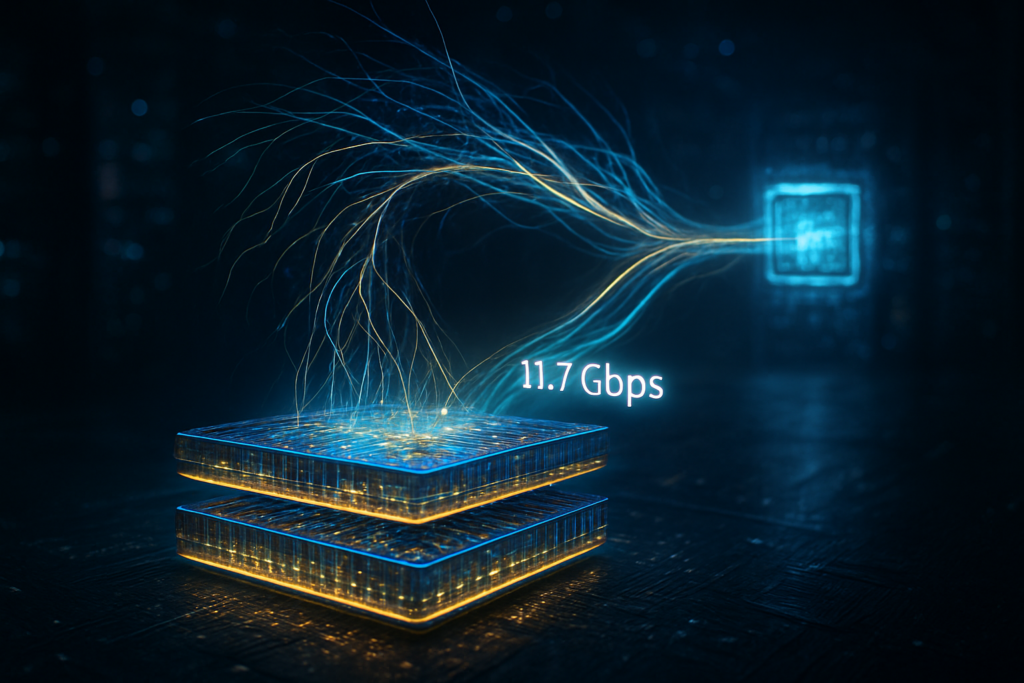

Technical Superiority and the Leap to 11.7 Gbps

Samsung’s HBM4 entry is characterized by a significant performance jump, with shipments scheduled to begin in February 2026. The company’s latest modules have achieved blistering data transfer speeds of up to 11.7 Gbps, surpassing the 10 Gbps benchmark originally set by industry leaders. This performance is achieved through the adoption of a sixth-generation 10nm-class (1c) DRAM process combined with an in-house 4nm foundry logic die. By integrating the logic die and memory production under one roof, Samsung has optimized the vertical interconnects to reduce latency and power consumption, a critical factor for data centers already struggling with massive energy demands.

In parallel, SK Hynix has utilized the recent CES 2026 stage to showcase its own engineering marvel: the industry’s first 16-layer HBM4 stack with a 48 GB capacity. While Samsung is leading with immediate volume shipments of 12-layer stacks in February, SK Hynix is doubling down on density, targeting mass production of its 16-layer variant by Q3 2026. This 16-layer stack utilizes advanced MR-MUF (Mass Reflow Molded Underfill) technology to manage the extreme thermal dissipation required when stacking 16 high-performance dies. Furthermore, SK Hynix’s collaboration with Taiwan Semiconductor Manufacturing Co. (NYSE: TSM) for the logic base die has turned the memory stack into an active co-processor, effectively allowing the memory to handle basic data operations before they even reach the GPU.

This new generation of memory differs fundamentally from HBM3E by doubling the number of I/Os from 1024 to 2048 per stack. This wider interface allows for massive bandwidth even at lower clock speeds, which is essential for maintaining power efficiency. Initial reactions from the AI research community suggest that HBM4 will be the "secret sauce" that enables real-time inference for trillion-parameter models, which previously required cumbersome and slow multi-GPU swapping techniques.

Strategic Maneuvers and the Battle for AI Dominance

The successful qualification of Samsung’s HBM4 by NVIDIA and AMD reshapes the competitive landscape of the semiconductor industry. For NVIDIA, the availability of high-yield HBM4 is the final piece of the puzzle for its "Rubin" architecture. Each Rubin GPU is expected to feature eight stacks of HBM4, providing a total of 288 GB of high-speed memory and an aggregate bandwidth exceeding 22 TB/s. By diversifying its supply chain to include both Samsung and SK Hynix—and potentially Micron Technology, Inc. (NASDAQ: MU)—NVIDIA secures its production timelines against the backdrop of insatiable global demand.

For Samsung, this moment represents a triumphant return to form after a challenging HBM3E cycle. By clearing NVIDIA’s rigorous qualification process ahead of schedule, Samsung has positioned itself to capture a significant portion of the $54.6 billion market. This rivalry benefits the broader ecosystem; the intense competition between the South Korean giants is driving down the cost per gigabyte of high-end memory, which may eventually lower the barrier to entry for smaller AI labs and startups that rely on renting cloud-based GPU clusters.

Existing products, particularly those based on the HBM3E standard, are expected to see a rapid transition to "legacy" status for flagship enterprise applications. While HBM3E will remain relevant for mid-range AI tasks and edge computing, the high-end training market is already pivoting toward HBM4-exclusive designs. This creates a strategic advantage for companies that have secured early allocations of the new memory, potentially widening the gap between "compute-rich" tech giants and "compute-poor" competitors.

The Broader AI Landscape: Breaking the Memory Wall

The rise of HBM4 fits into a broader trend of "system-level" AI optimization. As GPU compute power has historically outpaced memory bandwidth, the industry hit a "memory wall" where the processor would sit idle waiting for data. HBM4 effectively smashes this wall, allowing for a more balanced architecture. This milestone is comparable to the introduction of multi-core processing in the mid-2000s; it is not just an incremental speed boost, but a fundamental change in how data moves within a machine.

However, the rapid growth also brings concerns. The projected 58% market growth highlights the extreme concentration of capital and resources in the AI hardware sector. There are growing worries about over-reliance on a few key manufacturers and the geopolitical risks associated with semiconductor production in East Asia. Moreover, the energy intensity of HBM4, while more efficient per bit than its predecessors, still contributes to the massive carbon footprint of modern AI factories.

When compared to previous milestones like the introduction of the H100 GPU, the HBM4 era represents a shift toward specialized, heterogeneous computing. We are moving away from general-purpose accelerators toward highly customized "AI super-chips" where memory, logic, and interconnects are co-designed and co-manufactured.

Future Horizons: Beyond the 16-Layer Barrier

Looking ahead, the roadmap for high-bandwidth memory is already extending toward HBM4E and "Custom HBM." Experts predict that by 2027, the industry will see the integration of specialized AI processing units directly into the HBM logic die, a concept known as Processing-in-Memory (PIM). This would allow AI models to perform certain calculations within the memory itself, further reducing data movement and power consumption.

The potential applications on the horizon are vast. With the massive capacity of 16-layer HBM4, we may soon see "World Models"—AI that can simulate complex physical environments in real-time for robotics and autonomous vehicles—running on a single workstation rather than a massive server farm. The primary challenge remains yield; manufacturing a 16-layer stack with zero defects is an incredibly complex task, and any production hiccups could lead to supply shortages later in 2026.

A New Chapter in Computational Power

The mass production of HBM4 by Samsung and SK Hynix marks a definitive new chapter in the history of artificial intelligence. By delivering unprecedented bandwidth and capacity, these companies are providing the raw materials necessary for the next stage of AI evolution. The transition to a 2048-bit interface and the integration of advanced logic dies represent a crowning achievement in semiconductor engineering, signaling that the hardware industry is keeping pace with the rapid-fire innovations in software and model architecture.

In the coming weeks, the industry will be watching for the first "Rubin" silicon benchmarks and the stabilization of Samsung’s February shipment yields. As the $54.6 billion market continues to expand, the success of these HBM4 rollouts will dictate the pace of AI progress for the remainder of the decade. For now, the "memory wall" has been breached, and the road to more powerful, more efficient AI is wider than ever before.

This content is intended for informational purposes only and represents analysis of current AI developments.

TokenRing AI delivers enterprise-grade solutions for multi-agent AI workflow orchestration, AI-powered development tools, and seamless remote collaboration platforms.

For more information, visit https://www.tokenring.ai/.